Data copied from page cache to process memory (may or may not page fault).System call copies data from disk to page cache (may or may not page fault, data may already be in page cache causing this to be skipped).If the process only does steps 2 and 3 once for each bit of data read, or the data gets dropped from memory because of memory pressure, mmap() is going to be slower. Process accesses memory for the first time, causing a page fault - expensive (and may need to be repeated if paged out).System call to create virtual mappings - very expensive.

#MEMORY MAPPED FILE SOFTWARE#

A poorly-configured RAID5 array of twenty-nine S-L-O-W 5400 rpm SATA disks on a slow, memory-starved system using S/W RAID with mismatched block sizes and misaligned file systems is going to give you poor performance compared to a properly configured and aligned SSD RAID 1+0 on a high-performance controller despite any software tuning you might try.īut the only way mmap() can be significantly faster is if you read the same data more than once and the data you read doesn't get paged out between reads because of memory pressure. reading blocksįirst, in most IO operations the characteristics of the underlying storage hardware dominates the performance. (It is possible to advise kernel in advance with madvise() so that the kernel may load the pages in advance before access).įor more details, there is related question on Stack Overflow: mmap() vs. Reading a large block with read() can be faster than mmap() in such cases, if mmap() would generate significant number of faults to read the file. If a page of the mapped file is not in memory, access will generate a fault and require kernel to load the page to memory.

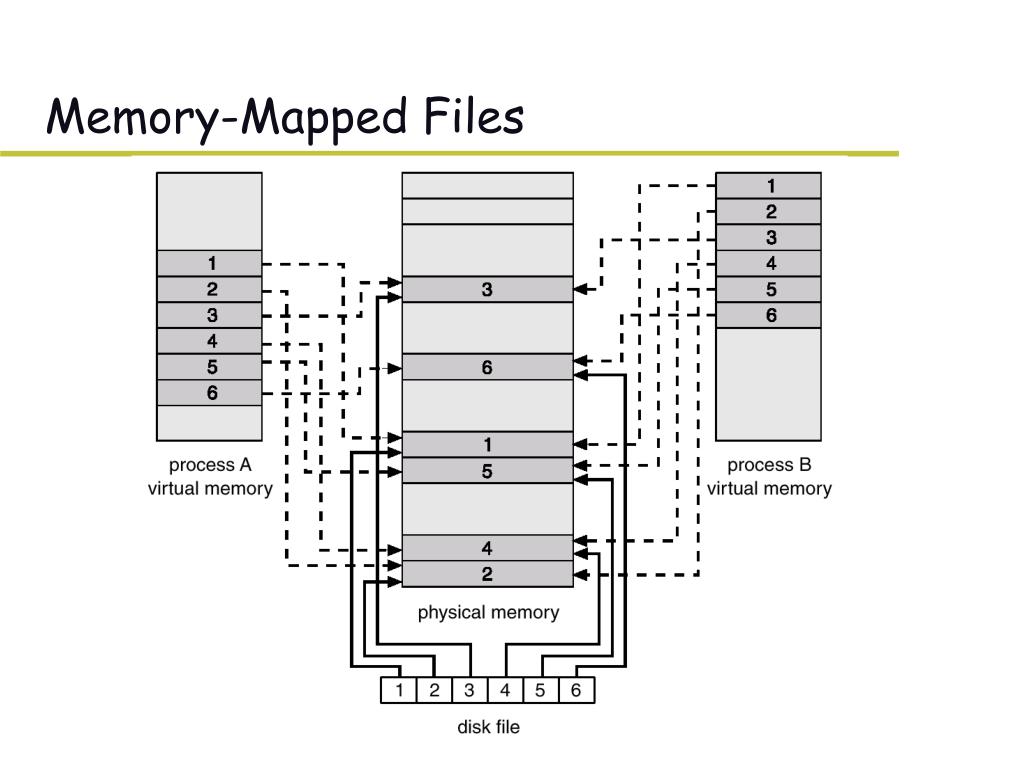

There is also no system call overhead when accessing memory mapped file after the initial call if the file is loaded to memory at initial mmap(). Using mmap() maps the file to process' address space, so the process can address the file directly and no copies are required. Kernel has to copy the data to/from those locations. Calls to read() and write() include a pointer to buffer in process' address space where the data is stored. Memory mapping a file directly avoids copying buffers which happen with read() and write() calls. How does memory mapping a file have significant performance increases over the standard I/O system calls? Then when it accesses the mapped memory, page faults happen. It takes a system call to create a memory mapping. If I am correct, memory mapping file works as following. Using the read() and write() system calls simplifies and speeds up fileĬould you analyze the performance of memory mapped file? Manipulating files through memory rather than incurring the overhead of Reads and writes to the file are handled as routine memory accesses. Read in more than a page-sized chunk of memory at a time). Read from the file system into a physical page (some systems may opt to However, a page-sized portion of the file is Initial access to the file proceeds through ordinary demand paging, Memory mapping a file isĪccomplished by mapping a disk block to a page (or pages) in memory. Lead to significant performance increases. Memory mapping a file, allows a part of the virtual address space to be logically associated with the file. Each file access requiresĪlternatively, we can use the virtual memory techniques discussed soįar to treat file I/O as routine memory accesses. System calls open(), read(), and write().

Consider a sequential read of a file on disk using the standard
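As a rough illustration of the two access paths discussed on this page, here is a minimal Python sketch that scans a file once with read() and once through mmap(). The function names, the 1 MiB chunk size, and the byte-sum workload are assumptions made for this example, not anything from the question or answers. The madvise() hint is guarded because Python exposes it only on platforms that provide the call (Python 3.8+).

```python
import mmap
import os

CHUNK = 1 << 20  # 1 MiB per step; an arbitrary size chosen for this sketch


def checksum_with_read(path):
    """Sum all bytes via read(): one system call and one copy per chunk."""
    total = 0
    fd = os.open(path, os.O_RDONLY)
    try:
        while True:
            # The kernel copies data from the page cache into a new buffer.
            buf = os.read(fd, CHUNK)
            if not buf:
                break
            total += sum(buf)
    finally:
        os.close(fd)
    return total


def checksum_with_mmap(path):
    """Sum all bytes via a mapping: one mmap() call, then page faults."""
    with open(path, "rb") as f:
        if os.fstat(f.fileno()).st_size == 0:
            return 0  # an empty file cannot be mapped
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as m, \
                memoryview(m) as view:
            # Optional read-ahead hint; only present on some platforms.
            if hasattr(m, "madvise") and hasattr(mmap, "MADV_SEQUENTIAL"):
                m.madvise(mmap.MADV_SEQUENTIAL)
            total = 0
            for off in range(0, len(view), CHUNK):
                # Slicing a memoryview does not copy the mapped pages;
                # the first touch of each page may trigger a page fault.
                total += sum(view[off:off + CHUNK])
            return total
```

Both functions touch every byte exactly once, which is the case where the answers above expect mmap() to have little or no advantage. Timing the two on a real file, with a cold and then a warm page cache, is the only way to know how they compare on a given system.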
